I was recently reading about some significant Python 3.11 performance improvements, and I wondered whether Perl 5 still gets significant performance improvements with each version, even though, being more mature, it may already be more optimized to begin with.

I thought I'd compare the final releases of every other major version, starting with 5.12.5, released 10 years ago, using a benchmark I made for a cloud VM comparison. As with any benchmark, it might not be representative of your own workloads: it benchmarks things that are relevant to me, and also some things that I would avoid but that are used by many modules and are notoriously slow (mainly DateTime and Moose). However, it is more representative of "real life" than, say, PerlBench (which of course has a different purpose), and its results are not lost in noise.

Here is the list of the tested Perl releases:

| Major Ver. | Release | Date |
|---|---|---|
| 5.12 | 5.12.5 | 2012-11-10 |
| 5.16 | 5.16.3 | 2013-03-11 |
| 5.20 | 5.20.3 | 2015-09-12 |
| 5.24 | 5.24.4 | 2018-04-14 |
| 5.28 | 5.28.3 | 2020-06-01 |
| 5.32 | 5.32.1 | 2021-01-23 |
| 5.36 | 5.36.0 | 2022-05-28 |

Performance on ARM64

I ran the benchmarks on an Apple M1, as I've found it to be currently the fastest CPU type for running Perl (and not only Perl). Times per test, lower is better:

| Perl Version: | 5.12 | 5.16 | 5.20 | 5.24 | 5.28 | 5.32 | 5.36 |
|---|---|---|---|---|---|---|---|
| Astro: | 9.82 | 7.97 | 7.56 | 5.93 | 5.96 | 5.89 | 5.66 |
| BioPerl Codons: | 12.40 | 12.86 | 7.51 | 7.18 | 7.60 | 6.48 | 7.23 |
| BioPerl Monomers: | 8.91 | 9.38 | 7.03 | 7.39 | 7.88 | 7.16 | 7.10 |
| CSS::Inliner: | 9.77 | 9.34 | 8.83 | 7.98 | 7.52 | 7.59 | 7.75 |
| DateTime: | 2.96 | 2.88 | 2.81 | 2.44 | 2.40 | 2.38 | 2.37 |
| Digest: | 13.40 | 13.43 | 13.38 | 13.38 | 13.25 | 13.71 | 13.24 |
| HTML::FormatText: | 4.47* | 4.43 | 4.19 | 3.71 | 3.54 | 3.57 | 3.59 |
| Math::DCT: | 6.48 | 4.80 | 4.74 | 4.65 | 2.84 | 3.03 | 2.92 |
| Moose: | 4.72 | 4.84 | 4.53 | 3.80 | 3.72 | 3.77 | 3.72 |
| Primes: | 7.48 | 7.38 | 7.45 | 6.49 | 6.46 | 6.61 | 6.50 |
| Regex/Replace: | 6.68 | 5.71 | 5.60 | 5.29 | 5.22 | 4.08 | 4.13 |
| Regex/Replace utf8: | 10.77 | 10.97 | 9.23 | 8.50 | 9.81 | 9.91 | 9.36 |
| Test Moose: | 8.21 | 8.68 | 8.31 | 8.06 | 7.94 | 8.52 | 8.30 |
| Total time: | 107.02 | 103.22 | 91.50 | 84.97 | 84.16 | 83.71 | 82.29 |

*perl 5.12.5 emitted "Malformed UTF-8 character" warnings on this test.

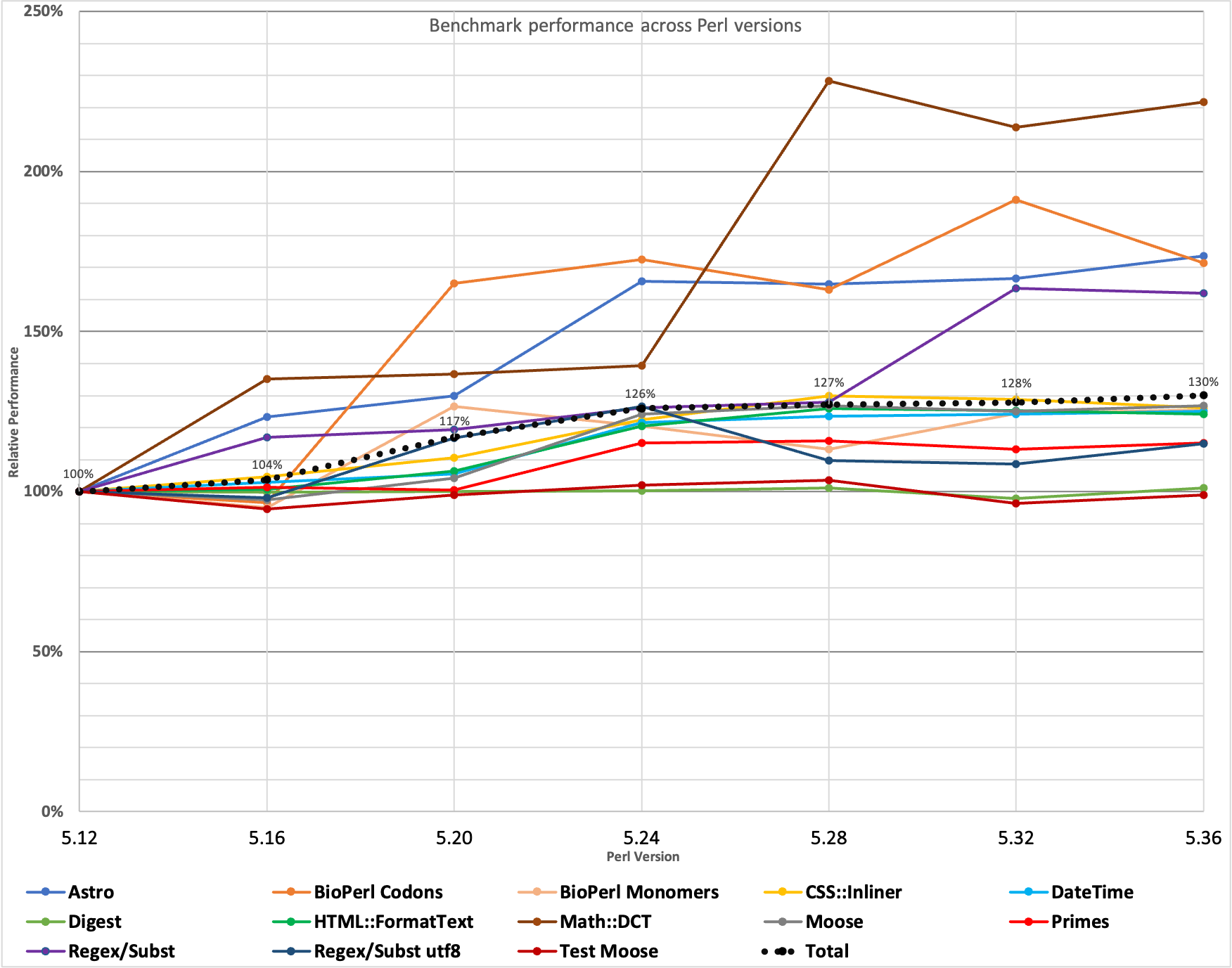

Or in a chart:


From the chart we see that up to 5.24 there were some very generous gains, over 25% overall. After that the gains were smaller: it seems that either there were no more easy gains left due to the maturity of the code, or the focus shifted to fixes and features.

It should be noted that from 5.12 to 5.24 there were quite a few Unicode-related improvements, so the Regex/Replace utf8 test, for example, which gained 49% in performance, is probably also doing more work than before while still being much faster.
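For readers wondering what separates the two regex tests, here is a hypothetical microbenchmark (not the actual test from the suite, whose code isn't shown here) that runs the same substitution on a plain byte string and on a string containing U+263A, a character above 0xFF that forces Perl to store the string internally as UTF-8. Character-aware matching on such strings is generally slower, which is consistent with the utf8 variant being the slower test in the tables. The input text and the `subst_words` name are my own illustrative choices.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Benchmark qw(cmpthese);

# Hypothetical inputs of equal length: identical text, except that the second
# string contains U+263A instead of '*', so Perl must store it as UTF-8.
my $bytes = "The quick brown fox jumps over the lazy dog * " x 2_000;
my $chars = "The quick brown fox jumps over the lazy dog \x{263A} " x 2_000;

# Upper-case the first letter of every word; works on a copy of the input.
sub subst_words {
    my ($s) = @_;
    $s =~ s/\b(\w)/\U$1/g;
    return $s;
}

# Run each variant for at least 1 CPU second and print a comparison table.
cmpthese(-1, {
    bytes => sub { subst_words($bytes) },
    utf8  => sub { subst_words($chars) },
});
```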

I was very surprised to see that the biggest gains were in an XS module (Math::DCT), so I profiled it to see what was going on. It turns out that unpack was much slower in older Perl versions; the C code itself runs at the same speed, as expected.
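To check something like this yourself, a microbenchmark that isolates unpack can be run under each installed perl, for instance with `perlbrew exec perl unpack_bench.pl`. The post doesn't show how the Math::DCT test uses unpack, so the `d*` template, the 1024-element buffer and the script name are assumptions for illustration only.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Benchmark qw(timethis);

# Hypothetical input: 1024 doubles packed into a binary string, roughly the
# kind of buffer a numeric XS routine might exchange with Perl code.
my @values = map { sin($_) } 0 .. 1023;
my $packed = pack 'd*', @values;

# Unpack the buffer back into a Perl list; only this step is timed.
sub unpack_doubles {
    my @out = unpack 'd*', $packed;
    return scalar @out;
}

printf "perl %vd\n", $^V;             # which perl produced these numbers
timethis(-1, \&unpack_doubles);       # run for at least 1 CPU second
```

Comparing the reported rate across perl versions shows whether unpack itself got faster, independently of the XS code.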

Performance on AMD64

While arm64 is gaining market share, it's not yet the most popular architecture, so I repeated the benchmarks on an AMD EPYC Milan (Google Cloud's n2d type) to verify the results:

| Perl Version: | 5.12 | 5.16 | 5.20 | 5.24 | 5.28 | 5.32 | 5.36 |
|---|---|---|---|---|---|---|---|
| Astro: | 12.30 | 10.14 | 10.09 | 8.28 | 7.89 | 7.99 | 7.69 |
| BioPerl Codons: | 16.50 | 15.40 | 9.85 | 9.82 | 9.70 | 9.53 | 9.70 |
| BioPerl Monomers: | 11.87 | 12.52 | 9.81 | 9.79 | 9.54 | 9.13 | 9.35 |
| CSS::Inliner: | 16.50 | 15.54 | 15.06 | 14.06 | 13.14 | 13.46 | 13.54 |
| DateTime: | 5.56 | 5.51 | 5.45 | 5.04 | 4.86 | 4.96 | 4.97 |
| Digest: | 11.82 | 11.83 | 11.85 | 11.83 | 11.86 | 11.85 | 11.85 |
| HTML::FormatText: | 7.86 | 7.62 | 7.44 | 6.65 | 6.39 | 6.55 | 6.57 |
| Math::DCT: | 11.41 | 7.11 | 7.12 | 7.27 | 4.37 | 4.54 | 4.32 |
| Moose: | 8.22 | 8.29 | 7.62 | 6.45 | 6.28 | 6.34 | 6.18 |
| Primes: | 7.68 | 7.08 | 6.92 | 6.30 | 6.37 | 6.51 | 6.62 |
| Regex/Replace: | 8.89 | 8.42 | 7.13 | 6.55 | 6.03 | 4.63 | 5.21 |
| Regex/Replace utf8: | 17.24 | 15.64 | 11.46 | 10.04 | 11.16 | 10.56 | 10.76 |
| Test Moose: | 13.71 | 14.28 | 14.62 | 14.36 | 14.60 | 15.23 | 15.16 |
| Total time: | 149.60 | 139.87 | 125.07 | 117.05 | 112.80 | 111.69 | 111.99 |

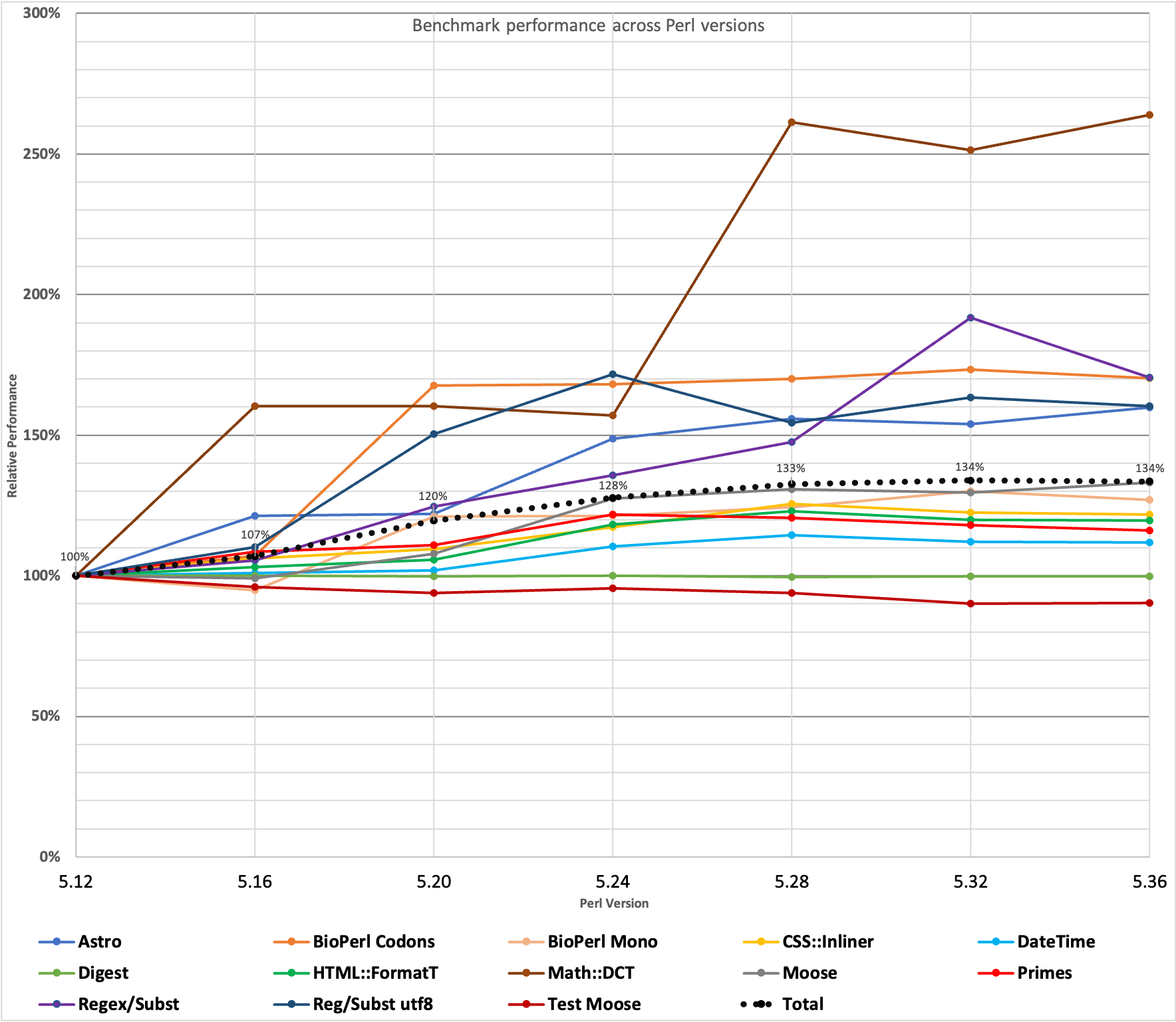


The results are not very different, except for a small performance regression in the Test Moose benchmark. As that test uses prove, most of its runtime is spent loading the interpreter and the modules for each test file; if you want to speed that up by orders of magnitude, try Test2::Aggregate. Moose itself seems to have gained speed but, of course, if you really want to speed it up, just switch away from Moose. Corinna is not ready (and not directly compatible), but there are Mouse, Moo, etc.
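Since prove launches a separate perl for every .t file, each file pays interpreter start-up plus module loading before any test runs. A rough sketch for measuring that fixed cost compares a bare `perl -e 1` against one that also loads a module. Core POSIX stands in here for a heavier dependency such as Moose, and the run count of 20 is arbitrary.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Time::HiRes qw(time);

# Average wall-clock time to start the current perl ($^X) with the given
# arguments and let it exit: the fixed per-process cost.
sub avg_startup {
    my ($runs, @args) = @_;
    my $start = time;
    for (1 .. $runs) {
        system($^X, @args) == 0
            or die "failed to run $^X @args: exit status $?\n";
    }
    return (time - $start) / $runs;
}

my $runs = 20;
printf "bare perl:    %6.1f ms\n", 1000 * avg_startup($runs, '-e', '1');
# POSIX is a core module, used here as a stand-in for a heavier dependency.
printf "perl -MPOSIX: %6.1f ms\n", 1000 * avg_startup($runs, '-MPOSIX', '-e', '1');
```

Multiplying the difference by the number of test files gives a rough idea of how much of a prove run is start-up overhead, which is the cost that aggregating tests into one process avoids.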

Conclusion

Here is a table of relative speed (the 5.12 time divided by each version's time), averaged over the two architectures, with 5.12 as the 100% base (higher is faster):

| Perl Version: | 5.12 | 5.16 | 5.20 | 5.24 | 5.28 | 5.32 | 5.36 |
|---|---|---|---|---|---|---|---|
| Astro | 100% | 122% | 126% | 157% | 160% | 160% | 167% |
| BioPerl Codons | 100% | 102% | 166% | 170% | 167% | 182% | 171% |
| BioPerl Monomers | 100% | 95% | 124% | 121% | 119% | 127% | 126% |
| CSS::Inliner | 100% | 105% | 110% | 120% | 128% | 126% | 124% |
| DateTime | 100% | 102% | 104% | 116% | 119% | 118% | 118% |
| Digest | 100% | 100% | 100% | 100% | 100% | 99% | 100% |
| HTML::FormatText | 100% | 102% | 106% | 119% | 124% | 123% | 122% |
| Math::DCT | 100% | 148% | 149% | 148% | 245% | 233% | 243% |
| Moose | 100% | 98% | 106% | 126% | 129% | 127% | 130% |
| Primes | 100% | 105% | 106% | 118% | 118% | 116% | 116% |
| Regex/Replace | 100% | 111% | 122% | 131% | 138% | 178% | 166% |
| Regex/Replace utf8 | 100% | 104% | 134% | 149% | 132% | 136% | 138% |
| Test Moose | 100% | 95% | 96% | 99% | 99% | 93% | 95% |
| Total | 100% | 105% | 118% | 127% | 130% | 131% | 132% |
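The percentages in the summary table can be recomputed from the two raw-time tables: for each architecture, divide the 5.12 time by the version's time, then average the two ratios. Here is a sketch for three of the rows; the data layout and the `relative_speed` name are my own. It reproduces the Astro and Total rows exactly, while for Math::DCT it gives 148% instead of 149% for 5.20, presumably a difference in rounding order.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use List::Util qw(sum);

# Run times copied from the ARM64 (M1) and AMD64 (EPYC Milan) tables,
# in the column order of @versions.
my @versions = qw(5.12 5.16 5.20 5.24 5.28 5.32 5.36);
my %times = (
    Astro => {
        arm64 => [9.82, 7.97, 7.56, 5.93, 5.96, 5.89, 5.66],
        amd64 => [12.30, 10.14, 10.09, 8.28, 7.89, 7.99, 7.69],
    },
    'Math::DCT' => {
        arm64 => [6.48, 4.80, 4.74, 4.65, 2.84, 3.03, 2.92],
        amd64 => [11.41, 7.11, 7.12, 7.27, 4.37, 4.54, 4.32],
    },
    Total => {
        arm64 => [107.02, 103.22, 91.50, 84.97, 84.16, 83.71, 82.29],
        amd64 => [149.60, 139.87, 125.07, 117.05, 112.80, 111.69, 111.99],
    },
);

# Relative speed of each version vs. 5.12 (base time / version time),
# averaged over the architectures and rounded to a whole percent.
sub relative_speed {
    my ($per_arch) = @_;
    my @archs = sort keys %$per_arch;
    my @pct;
    for my $i (0 .. $#versions) {
        my @ratios = map { $per_arch->{$_}[0] / $per_arch->{$_}[$i] } @archs;
        push @pct, sprintf '%.0f', 100 * sum(@ratios) / @ratios;
    }
    return @pct;
}

printf "%-10s %s\n", 'Version', join ' ', @versions;
for my $name (sort keys %times) {
    my @pct = relative_speed($times{$name});
    printf "%-10s %s\n", $name, join ' ', map { "$_%" } @pct;
}
```

Averaging the per-architecture ratios, rather than summing the raw times first, keeps the slower AMD64 machine from dominating the result.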

I think a fair conclusion is that you should not fear worse performance from newer Perls adding more features to the core; it's really the opposite. Especially if you are using a Perl older than 5.24, it is probably worth upgrading just for the performance.

Of course, you should benchmark your own workload, as there are special cases. For example, we found above that unpack is much faster from 5.28 onwards, but there could equally be specific operations that got slower and might affect you.
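A minimal harness for timing your own code under whichever perl runs it, for example via `perlbrew exec perl bench.pl` to cover every installed version. The `my_workload` body is a hypothetical placeholder to replace with a representative slice of your real work, and taking the fastest of several runs is just one simple way to reduce noise, not the method used for the tables above.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Time::HiRes qw(time);
use List::Util qw(min);

# Hypothetical placeholder: replace with a representative slice of your
# real workload (parsing, DB row processing, template rendering, ...).
sub my_workload {
    my %count;
    $count{ $_ % 97 }++ for 1 .. 200_000;
    return scalar keys %count;
}

# Time several runs and keep the fastest, which is less sensitive to
# background noise than a single measurement.
sub best_time {
    my ($code, $runs) = @_;
    my @times;
    for (1 .. $runs) {
        my $t0 = time;
        $code->();
        push @times, time - $t0;
    }
    return min(@times);
}

printf "perl %vd: %.3f s\n", $^V, best_time(\&my_workload, 5);
```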

Computer scientist, physicist, amateur astronomer.


Thanks for the comparisons. Indeed, perl is getting faster.

Note that there's also Benchmark::Perl::Formance: https://metacpan.org/dist/Benchmark-Perl-Formance . It yields similar results: https://www.reddit.com/r/perl/comments/uf6ujd/benchmarkperlformance_results/ (visualized: https://imgur.com/a/a26EjRn ).

Note that 5.38 will include some nice performance improvements at a very basic level (variable assignment, clearing, anonymous subroutines, etc.). Hopefully these changes will be clearly visible in the benchmarks; 5.37.5 already includes a lot of them. It hypes me up a lot, as someone who loves it when stuff runs fast.

Thanks for the hint! That's great to know.

But how does Perl behave when compared to other languages like Python? Does https://benchmarksgame-team.pages.debian.net/benchmarksgame/fastest/perl-python3.html make sense, or are there other resources/articles you recommend?

I like the general idea of speeding things up, regardless of how Perl does against other interpreted languages, since those were not intended for time-sensitive or really high-throughput tasks; in such cases, I would use a language suited to that specific purpose, with enough abstraction for the problem (C, C++, Go, ...).

BenchmarksGame isn't bad, but its perl comparisons are a bit flawed:

Overall, speed is an important factor. Features are obviously more important, but lately a lot of software has been rewritten just to gain some speed: ack got a faster C counterpart in ag, and people were moving away from Ranger (a Python file manager) to vifm / lf just for the speed. I hope the trend of making Perl faster continues. Obviously there's a ceiling somewhere, but the latest improvements made me very optimistic.

```
perlbrew exec perl -MBenchmark=timethis -e '$s=(join"","A".."Z")x1_000; timethis(-1, sub { my $c= lc $s })'

perl-5.37
==========
timethis for 1: 1 wallclock secs ( 1.05 usr + 0.00 sys = 1.05 CPU) @ 192752.38/s (n=202390)

perl-5.36
==========
timethis for 1: 1 wallclock secs ( 1.05 usr + 0.00 sys = 1.05 CPU) @ 192752.38/s (n=202390)

perl-5.34
==========
timethis for 1: 2 wallclock secs ( 1.08 usr + 0.00 sys = 1.08 CPU) @ 424769.44/s (n=458751)

perl-5.32
==========
timethis for 1: 1 wallclock secs ( 1.08 usr + 0.00 sys = 1.08 CPU) @ 72403.70/s (n=78196)

perl-5.30
==========
timethis for 1: 1 wallclock secs ( 1.08 usr + 0.00 sys = 1.08 CPU) @ 424769.44/s (n=458751)

perl-5.28
==========
timethis for 1: 1 wallclock secs ( 1.10 usr + 0.00 sys = 1.10 CPU) @ 91995.45/s (n=101195)

perl-5.26
==========
timethis for 1: 1 wallclock secs ( 1.10 usr + 0.00 sys = 1.10 CPU) @ 91995.45/s (n=101195)

perl-5.24
==========
timethis for 1: 1 wallclock secs ( 1.10 usr + 0.00 sys = 1.10 CPU) @ 91995.45/s (n=101195)

perl-5.22
==========
timethis for 1: 1 wallclock secs ( 1.04 usr + 0.01 sys = 1.05 CPU) @ 91021.90/s (n=95573)

perl-5.20
==========
timethis for 1: 1 wallclock secs ( 1.10 usr + 0.01 sys = 1.11 CPU) @ 91166.67/s (n=101195)

perl-5.18
==========
timethis for 1: 1 wallclock secs ( 1.08 usr + 0.01 sys = 1.09 CPU) @ 87681.65/s (n=95573)

perl-5.16
==========
timethis for 1: 1 wallclock secs ( 1.09 usr + 0.00 sys = 1.09 CPU) @ 87681.65/s (n=95573)

perl-5.14
==========
timethis for 1: 1 wallclock secs ( 1.08 usr + 0.01 sys = 1.09 CPU) @ 87681.65/s (n=95573)

perl-5.12
==========
timethis for 1: 1 wallclock secs ( 1.08 usr + 0.00 sys = 1.08 CPU) @ 910222.22/s (n=983040)

perl-5.10
==========
timethis for 1: 1 wallclock secs ( 1.22 usr + 0.01 sys = 1.23 CPU) @ 932422.76/s (n=1146880)

perl-5.8
==========
timethis for 1: 1 wallclock secs ( 1.04 usr + 0.01 sys = 1.05 CPU) @ 936228.57/s (n=983040)

perl-5.6
==========
timethis for 1: 1 wallclock secs ( 1.10 usr + 0.00 sys = 1.10 CPU) @ 735965.45/s (n=809562)
```