For CPAN Day: Show Off Your Web Framework!

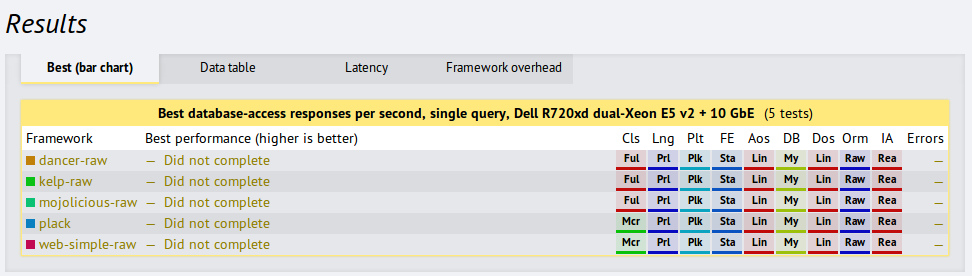

While I know many of you have CPAN Day projects, some of you might still be searching. There is a very well known benchmark from TechEmpower which compares web frameworks. It gets plenty of press and generates much interest. Unfortunately, the Perl results look like this:

We all know the reputation that Perl has in the outside world, and sadly these results tend to reinforce it. The person or persons who added these apps seem to have long since forgotten about them. The Mojolicious app, at least, was a port of one of the others and did not exemplify either the style or the power of the framework. The others likely share those traits.

But all is not lost! TechEmpower has recently made it much easier to contribute, and I have fixed the deployment and toolchain problems. I have also updated the Mojolicious app. Would you like to improve the submission of your favorite framework or add your own? Read on!

The Repository

To start, clone the GitHub repository. The individual apps live in /frameworks/Perl/. In that directory you will find the files that install module dependencies, start the application, configure the tests and of course the application itself.

Each of the Perl submissions does this slightly differently. I recommend choosing one and copying it; they are fairly self-explanatory. In bash scripts, $FWROOT, $IROOT and $TROOT represent the paths to the checkout, the installation directory (for things like the perl interpreter) and the test app (i.e. /frameworks/Perl/mojolicious). In Python scripts, args.fwroot, args.iroot and args.troot do the same. I have tried to fix these and similar deployment problems in the existing Perl apps (though some of the args paths are very new and still need to be used in setup.py for several apps).
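To make the three roots concrete, here is a minimal Python sketch of how a setup script might build its start command from them. Only the attribute names args.fwroot, args.iroot and args.troot come from the description above; the helper name start_command, the perl/bin/perl layout under the installation root and the app.pl file name are hypothetical, so check an existing submission for the real values.

```python
import posixpath
from types import SimpleNamespace


def start_command(args):
    """Return an argv list that runs a Perl app from its test directory.

    `args` is assumed to expose `iroot` and `troot` attributes.
    Everything below those roots is a made-up layout for illustration.
    """
    # Hypothetical: the perl interpreter lives under the installation root.
    perl = posixpath.join(args.iroot, "perl", "bin", "perl")
    # Hypothetical: the application script sits in the test's own directory.
    app = posixpath.join(args.troot, "app.pl")
    return [perl, app]


# Stand-in for the object the benchmark toolset would pass in (assumed shape).
args = SimpleNamespace(
    fwroot="/home/user/FrameworkBenchmarks",
    iroot="/home/user/FrameworkBenchmarks/installs",
    troot="/home/user/FrameworkBenchmarks/frameworks/Perl/mojolicious",
)

command = start_command(args)
print(command)
```

Building every path from the roots, rather than hard-coding an absolute location, is what lets the same submission run on a local machine and on a CI worker.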

The Tests

The benchmark itself consists of several challenges, each testing functionality common to most webapps. The tests are defined here. Since tests have been added over time, not all are required; your app should declare which tests are to be run in its benchmark_config file. The Mojolicious app now implements all of these tests, so you can use it as a guide.

Most of the apps do not implement all the tests, and some that do, do not do so correctly. As I mention in the next section, some of the outputs show fail even when the net result is pass, so please look carefully. I’m not sure why this is, but for now it’s what we have.

Travis Integration

Another recent addition is that the tests are available through Travis-CI. This means that you don’t have to deploy the tests on your own hardware, which is (or at least was) a rather challenging task. The test will (or should) detect which app you are improving and skip the others (though that still takes a few seconds per app), which speeds up your testing dramatically. Note that if you are adding a new submission, you should add it to the .travis.yml file.

Also, if you fork the repository, be sure to enable it in your own (free) Travis account. That way, when you push a changeset, you don’t have to wait for anyone else’s changes to complete their tests. Test this way before sending a pull request and you will get much more accomplished much more quickly.

For those of you improving an existing app, you might want to start by looking at the results of a recent build. Builds that take on the order of double-digit hours are complete runs and should contain all the information for each app. Builds that take more like 45 minutes are likely for only a single app and will just say skip for all the others. Some tests appear to pass, but in the logs you can still see failures. I’m not sure why that is, but please take a look. For most apps the error is simply slightly malformed results.

To nginx or Not to nginx

Most of the Perl apps use Starman as an app server and nginx as a reverse proxy. For the Mojolicious app, I removed nginx and simply use hypnotoad (essentially Mojolicious’ Starman). I have debated the choice in my own mind. I removed it because, before Travis integration, doing so made the app much easier to deploy locally. That is no longer strictly necessary, but I do wonder whether, without the benefit of caching (which is against the rules), removing the middleman is the better choice; Starman and hypnotoad are pretty impressive on their own.

Probably the right solution is to declare two runs, one with and one without it (this can be done in the benchmark_config file). I hope to do that if I have time.
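As a rough sketch of what declaring two runs could look like, the Python below builds a benchmark_config-style structure with one entry per variant and prints it as JSON. The key names (framework, tests, setup_file, json_url, db_url, port), the variant names default and nginx, the setup file names and the port numbers are all assumptions made for illustration; copy the real keys from an existing submission's benchmark_config rather than from this example.

```python
import copy
import json

# Hypothetical settings shared by both runs; the key names are assumptions.
base_run = {
    "setup_file": "setup",
    "json_url": "/json",
    "db_url": "/db",
    "port": 8080,
}


def variant(base, **overrides):
    """Copy the shared settings and apply per-run overrides."""
    run = copy.deepcopy(base)
    run.update(overrides)
    return run


config = {
    "framework": "mojolicious",
    "tests": [
        {
            # Run 1: the app server answers requests directly.
            "default": variant(base_run),
            # Run 2: the same app behind nginx, on a different (made-up) port
            # and with its own (made-up) setup file.
            "nginx": variant(base_run, setup_file="setup_nginx", port=8081),
        }
    ],
}

print(json.dumps(config, indent=2))
```

Each run lists only the test URLs it actually implements, which is also how an app declares which tests it takes part in.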

Make Something Cool!

For a start I hope to see apps that pass their declared tests and do us proud. I really hope to see good example apps, well written, easy to understand, and fast. This is a great way to show off Perl outside of the echo chamber!

Happy CPAN Day everyone and happy hacking!

As I delve into the deeper Perl magic I like to share what I can.


Thanks for the hand-holding explanation of how to get involved.

In terms of deadlines - Round 10 has finished, they just haven’t got around to dealing with everyone’s pull requests to generate a report.

Hopefully we’ll be informed of the Round 11 deadline here: https://groups.google.com/forum/?fromgroups=#!topic/framework-benchmarks/FZcztfLa8XM