The sculpture shapes the sculptor.

Parenting ain’t easy. Often it is something your kids teach you. Even worse, the lesson often starts with “<INSERT_KIDS_FRIENDS_NAME>’s dad lets him do <INSERT_CURRENTLY_PROHIBITED_ACTIVITY>”. In the constant battle to shape your offspring into a model citizen with the values you value, and to turn him/her into a self-sustaining organism, one applies tools that enhance particular features and remove the superfluous or the undesirable.

Yet every action, it is said, has an equal and opposite reaction. So it is unsurprising that when one tells a child *not* to do “something”, the natural neural triggers in the child’s brain ensure that that “something” will be done; if one encourages a particular activity for personal development, that task is instantly seen as boring and burdensome, and there is an immediate reflex deployment of many avoidance strategies. The situation is actually worse than it appears. For while you may have some illusion of power to manipulate your child’s development, there is, at the same time, a stealthy manipulation of your own choices by your child. Trainers from one store may look, feel and work exactly the same as those from another while costing an order of magnitude more, yet these manipulations transiently distort your own understanding of value in favour of your child’s.

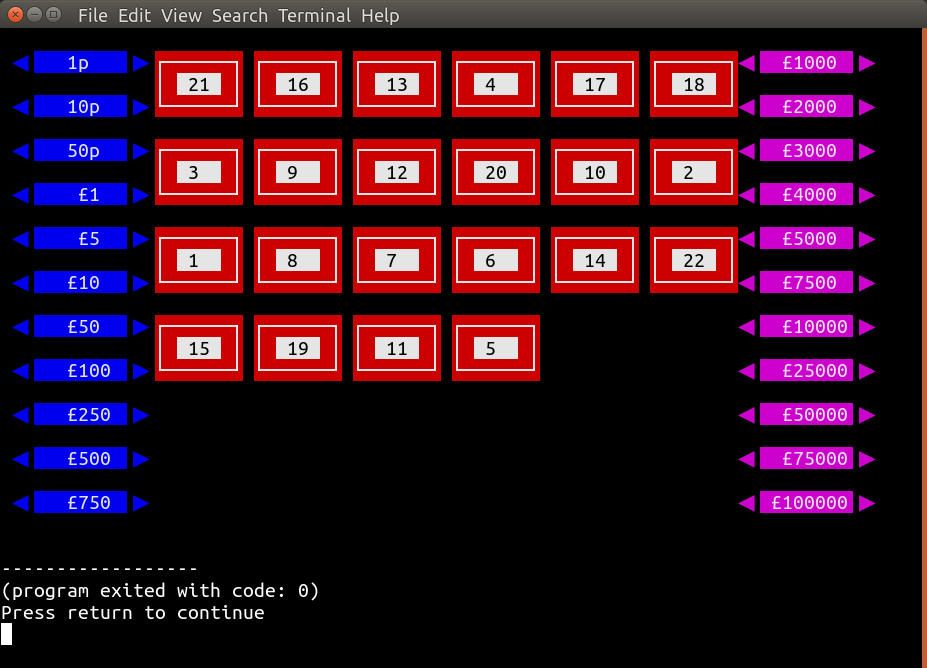

Suffice to say, I am a cheapskate: I won’t pay money for CAD tools (or trainers) and use only Linux. I am constrained in my ability to accept change: I won’t use Python and FreeCAD. I try to bend my life to my will. Yet in trying to do so, I change, I waste time, and life goes on as before, impervious to my efforts. Rather than using the available GUI tools for developing 3D objects, I thought it might be possible to sculpt 3D objects using Perl. I have taken a great deal of inspiration from EWILHELM and NERDVANA, who have made efforts to bring CAD to Perl programmers. My own recent efforts to twist OpenSCAD (“The Programmers Solid 3D CAD Modeller”) into working with me through Perl are ongoing. I can use Perl to create OpenSCAD scripts fairly easily: CAD::OpenSCAD is the result. This module can generate said scripts and do almost everything that basic OpenSCAD scripts can do, with some Perlish enhancements.

It is helpful for me: using Perl, I am now able to generate complex gears more easily than in my old CAD tools, by leveraging OpenSCAD’s ability to do all the heavy work. But most of this might have been possible using the OpenSCAD scripting language alone, without Perl.

But I needed it to be better. OpenSCAD has limitations. The shapes it uses are not “self-aware”. I can change an object’s shape, location and orientation, but cannot query that location and orientation. The points and facets of the shape are not accessible. It is also slow for complex tasks. But the programmer might be able to create an object that makes these parameters accessible.
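To make the idea of a “self-aware” shape concrete, here is a minimal sketch in plain Perl. The package name `SelfAwareShape` and its methods are hypothetical, invented for illustration; this is not the CAD::OpenSCAD API. The object keeps its own list of points, so every move updates data that can be asked about afterwards.

```perl
use strict;
use warnings;

# Hypothetical illustration; not the CAD::OpenSCAD API.
package SelfAwareShape;

sub new {
    my ($class, @points) = @_;
    # Copy each [x, y, z] triple so the caller's data is not modified
    return bless { points => [ map { [@$_] } @points ] }, $class;
}

# Move every point by the offsets (dx, dy, dz); returns $self for chaining
sub translate {
    my ($self, @d) = @_;
    for my $p (@{ $self->{points} }) {
        $p->[$_] += $d[$_] for 0 .. 2;
    }
    return $self;
}

# Query: the average of all points, a simple stand-in for "location"
sub centroid {
    my ($self) = @_;
    my @sum = (0, 0, 0);
    my $n   = @{ $self->{points} };
    for my $p (@{ $self->{points} }) {
        $sum[$_] += $p->[$_] for 0 .. 2;
    }
    return map { $_ / $n } @sum;
}

# Query: minimum and maximum corners of the axis-aligned bounding box
sub bounds {
    my ($self) = @_;
    my @pts = @{ $self->{points} };
    my @min = @{ $pts[0] };
    my @max = @{ $pts[0] };
    for my $p (@pts) {
        for my $i (0 .. 2) {
            $min[$i] = $p->[$i] if $p->[$i] < $min[$i];
            $max[$i] = $p->[$i] if $p->[$i] > $max[$i];
        }
    }
    return ([@min], [@max]);
}

package main;

# The eight corners of a unit cube, then moved to a new location
my @corners = map { my $i = $_; [ $i & 1, ($i >> 1) & 1, ($i >> 2) & 1 ] } 0 .. 7;
my $cube    = SelfAwareShape->new(@corners)->translate(10, 0, 5);

my @c = $cube->centroid;
printf "centroid: %.1f %.1f %.1f\n", @c;   # prints "centroid: 10.5 0.5 5.5"
```

The centroid is only one possible answer to “where is it?”; the bounding box from `bounds` is another, and both come for free once the points live in Perl rather than inside the OpenSCAD script.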

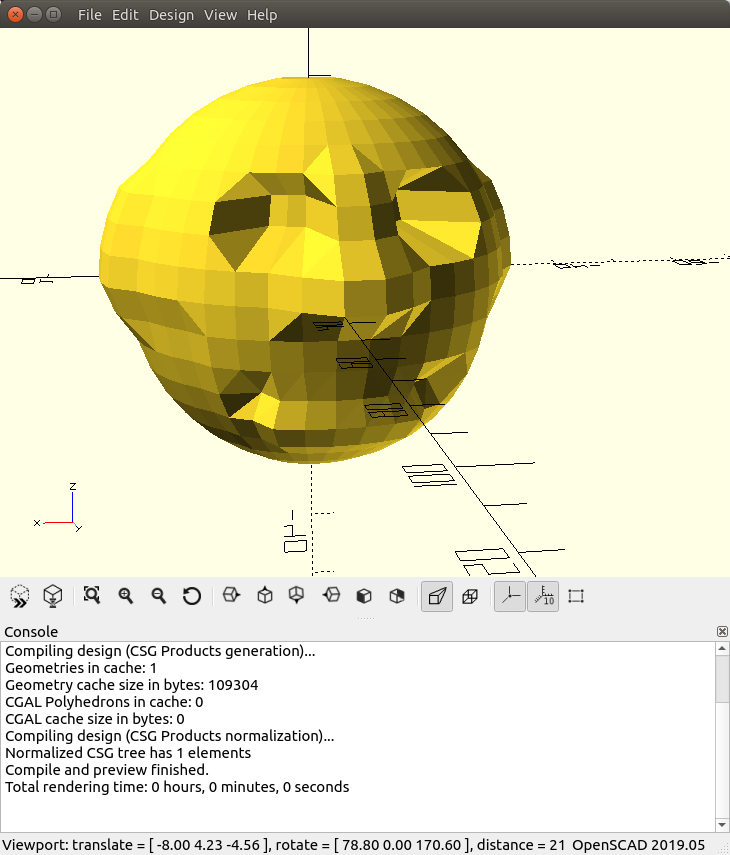

To mould CAD::OpenSCAD into something more useful, I could use Perl to create all the points and facets of a 3D object, in the form of a Perl object. These points can then be manipulated to sculpt the polyhedron, poking dents into the shape or pulling part of its surface outwards. The Perl object can then generate the 3D script with these modified parameters, for consumption by the hardworking OpenSCAD backend.
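A rough sketch of that workflow, in plain Perl, might look like the following. The helper names (`make_cube`, `push_points`, `to_scad`) are hypothetical and are not part of CAD::OpenSCAD; the only OpenSCAD feature assumed is its built-in `polyhedron(points = ..., faces = ...)` primitive, which expects each face listed clockwise when seen from outside. The cube gets one extra point in the middle of its top so there is something to poke.

```perl
use strict;
use warnings;

# Hypothetical helpers for illustration; not the CAD::OpenSCAD API.

# A unit cube as raw data: 8 corners plus point 8 at the centre of the top.
# Faces are index lists into the points, clockwise when viewed from outside.
sub make_cube {
    my @points = map { my $i = $_; [ $i & 1, ($i >> 1) & 1, ($i >> 2) & 1 ] } 0 .. 7;
    push @points, [ 0.5, 0.5, 1 ];
    my @faces = (
        [0, 1, 3, 2],                                   # bottom
        [0, 4, 5, 1], [2, 3, 7, 6],                     # front, back
        [0, 2, 6, 4], [1, 5, 7, 3],                     # left, right
        [4, 6, 8], [6, 7, 8], [7, 5, 8], [5, 4, 8],     # top, as a fan of triangles
    );
    return { points => \@points, faces => \@faces };
}

# Move every point within $radius of $centre by $delta; returns how many moved
sub push_points {
    my ($shape, $centre, $radius, $delta) = @_;
    my $moved = 0;
    for my $p (@{ $shape->{points} }) {
        my $dist2 = 0;
        $dist2 += ($p->[$_] - $centre->[$_]) ** 2 for 0 .. 2;
        next if $dist2 > $radius ** 2;
        $p->[$_] += $delta->[$_] for 0 .. 2;
        $moved++;
    }
    return $moved;
}

# Render the points and faces as one OpenSCAD polyhedron() statement
sub to_scad {
    my ($shape) = @_;
    my $list = sub {
        my ($rows) = @_;
        return '[' . join(', ', map { sprintf('[%s]', join(', ', @$_)) } @$rows) . ']';
    };
    return sprintf "polyhedron(points = %s, faces = %s);\n",
        $list->($shape->{points}), $list->($shape->{faces});
}

my $shape = make_cube();

# Poke a dent: push the top-centre point 0.4 units down into the cube
my $moved = push_points($shape, [0.5, 0.5, 1], 0.1, [0, 0, -0.4]);

my $scad = to_scad($shape);
print $scad;
```

Saving the printed text to a `.scad` file and opening it in OpenSCAD should show a cube with a shallow pyramid-shaped dent in its top. Pulling the surface outwards is the same call with a positive z offset, and a finer mesh of points would give smoother dents.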

But as I do this, I find myself learning about 3D coordinates, facets, structures and their manipulations. The difficulties of the past are replaced by new ones, diverting me from my original goals. Speaking of which, can I just ask: will designer trainers really help improve grades? Asking for a friend’s parent.